Why are there so many deepfakes of Bollywood actresses?

sing while scantily clad.

Except neither of those things actually happened.

They are the latest in a string of deepfake videos which have gone viral in recent weeks.

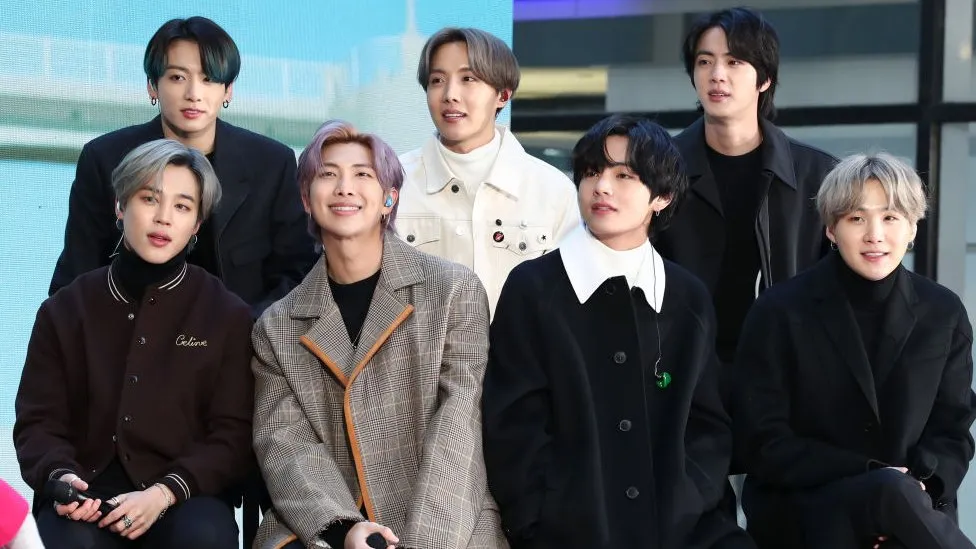

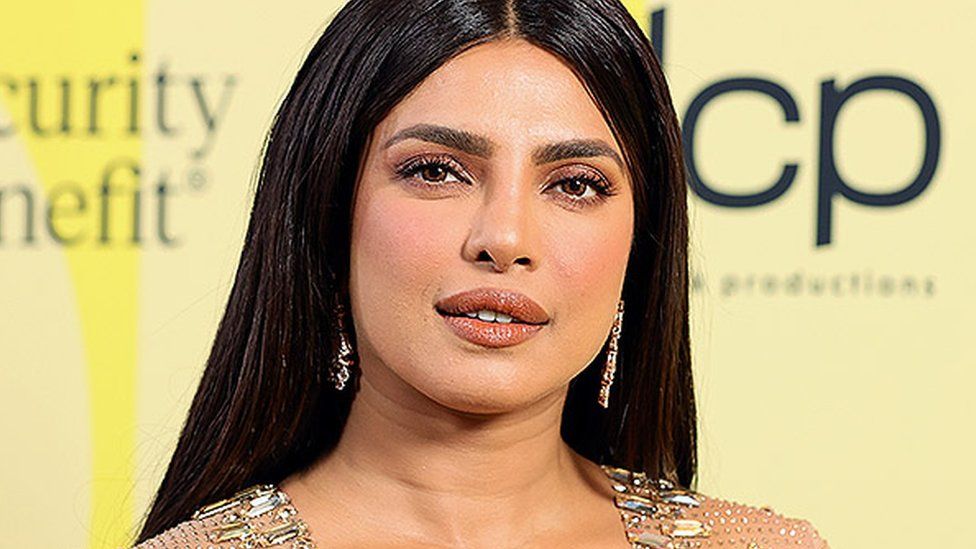

Rashmika Mandanna, Priyanka Chopra Jonas and Alia Bhatt are among the stars who have been targeted by such videos, in which their faces or voices were replaced with someone else’s.

Pictures are often taken from social media profiles and used without consent.

So what is behind the rise in Bollywood deepfakes?

Deepfakes have been around, and have targeted celebrities, for a long time.

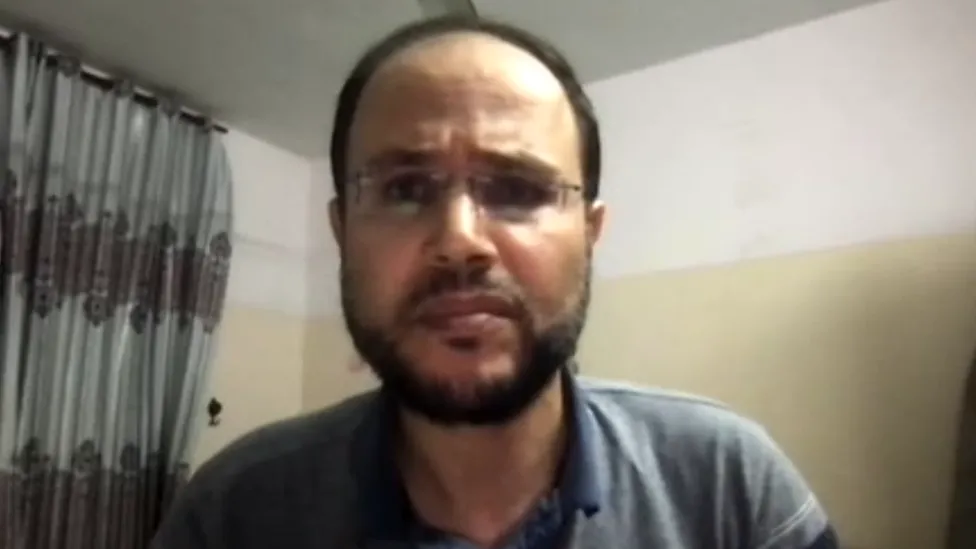

“Hollywood has borne the brunt of it so far,” AI expert Aarti Samani told the BBC, with actresses such as Natalie Portman and Emma Watson among the high-profile victims.

But she said recent developments in artificial intelligence (AI) have made it even easier to create fake audio and video of people.

“The tools have become so much more sophisticated over the past six months to a year, which explains why we are seeing more of this content in other countries,” Ms Samani said.

“Many tools are available now, which allow you to create realistic synthetic images at little or no cost, making it very accessible.”

Ms Samani said India also has some unique factors, including a large young population, heavy use of social media, and “fascination with Bollywood and obsession with celebrity culture”.

“This results in videos spreading quickly, magnifying the problem,” she added, saying that the motivating factor for creating such videos was twofold.

“Bollywood celebrity content makes an attractive clickbait, generating large ad revenue. There is also the possibility of selling data of people who engage with the content, unknown to them.”

‘Extremely scary’

Often, fake images are used for pornographic videos, but fake videos can be made of almost anything.

Recently, actress Mandanna, 27, had her face morphed on to an Instagram video, featuring another woman in a black bodysuit.

It went viral on social media, but a journalist at fact-checking platform Alt News reported that the video was a deepfake.

Mandanna called the incident “extremely scary” and urged people not to share such material.

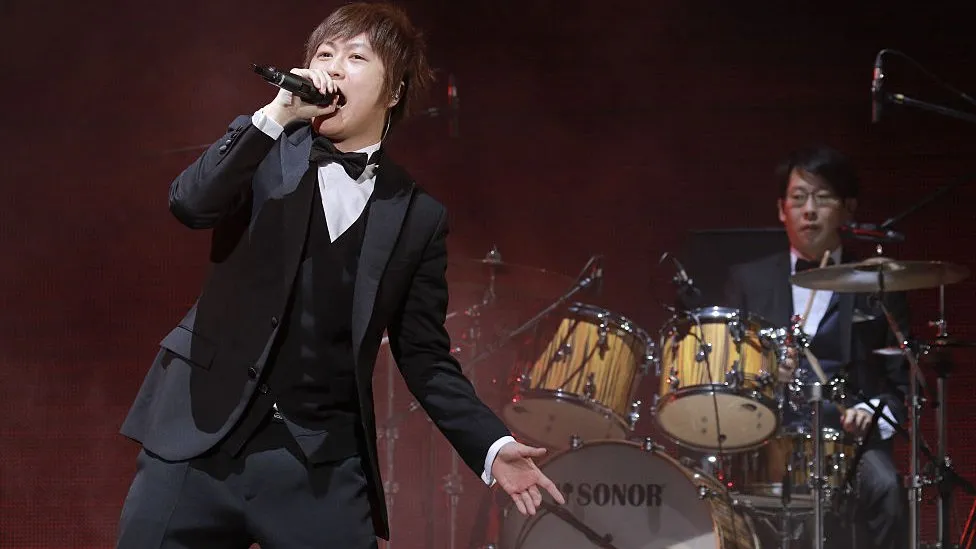

A video of megastar Chopra Jonas also recently went viral. In this case, instead of changing her face, it was her voice that was substituted in a clip which promoted a brand, while also giving investment ideas.

Actress Bhatt was also targeted in a video showing a woman, whose face looks like hers, making various obscene gestures to the camera.

Other stars, including actress Katrina Kaif, have also been targeted. In her case, a still from her film Tiger 3, showing her wearing a towel, was doctored so that the towel was replaced with a more revealing outfit.

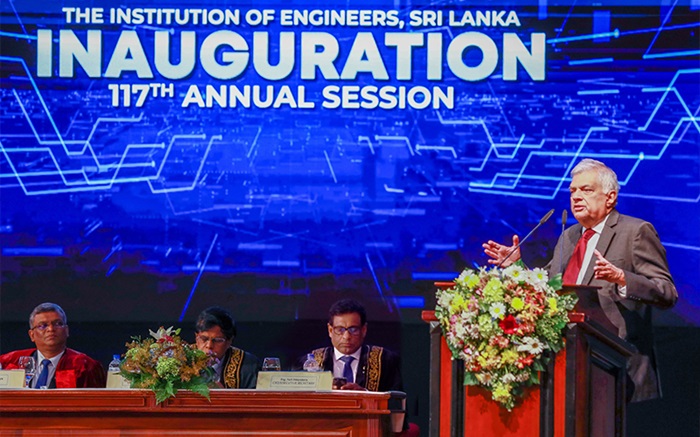

It is not just Bollywood actresses who are affected. Others have been targeted recently, including the Indian industrialist Ratan Tata, who was the subject of a deepfake video in which he appeared to give investment advice.

But the trend does seem to be affecting women in particular.

Research firm Sensity AI estimates that between 90% and 95% of all deepfakes are non-consensual porn. The vast majority of those target women.

“I find it terrifying,” said Ivana Bartoletti, global chief privacy officer at the Indian technology services and consulting company Wipro.

“For women, it’s particularly problematic as this media can be used to produce porn and violence images, and, as we all know, there is a market for this,” she added.

“This has always been an issue, it’s the speed and availability of these tools which is staggering now.”

Ms Samani agrees, saying the problem of deepfakes “is definitely worse for women”.

“Women’s worth is often equated with beauty standards, and female bodies are objectified,” she said.

“Deepfakes take this further. The non-consensual nature of deepfakes denies women the dignity and autonomy over the depiction of their bodies. It takes away the agency, and puts power in the hands of the perpetrators.”

Calls for action

As deepfake videos spread, there have been lots of calls for governments and tech companies to get a grip on such content.

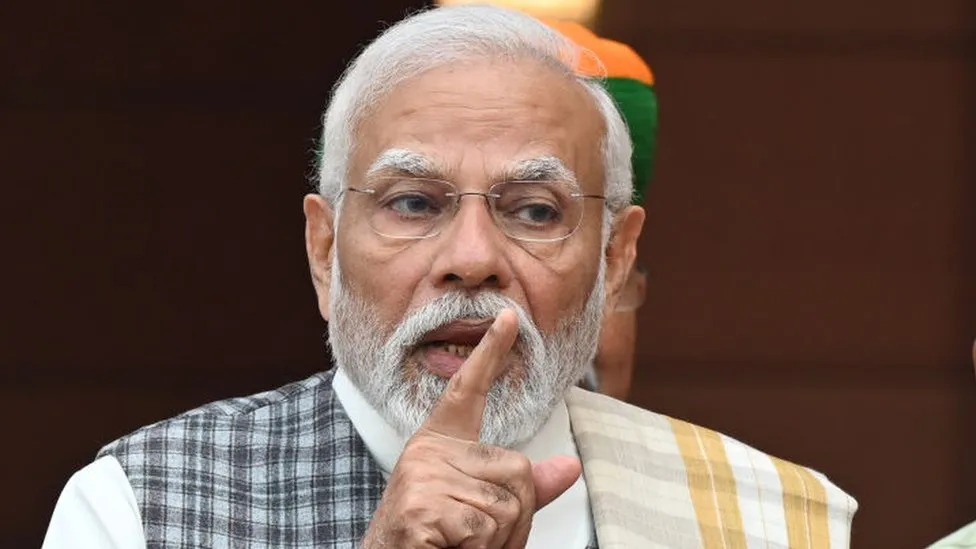

India’s government, for its part, has been cracking down on deepfakes as it heads into a general election year.

After the video of Mandanna went viral, the country’s IT minister Rajeev Chandrasekhar spoke out against deepfakes, saying they were the “latest and even more dangerous and damaging form of misinformation and need to be dealt with by platforms”.

Under India’s IT rules, social media platforms have to ensure that “no misinformation is posted by any user”.

Platforms that do not comply could be taken to court under Indian law.

But Ms Bartoletti said the problem is much wider than just India, with countries around the world focused on tackling this issue.

“It’s not just Bollywood actors. Deepfakes are also targeting politicians, business people and others,” she said. “Many governments around the world have started to worry about the impact deepfakes can have on other things like democratic viability in elections.”

She said social media platforms needed to be held accountable, and should be proactively identifying and taking down deepfakes.

Ms Samani said male allyship also plays “a very important role” in tackling the problem.

“Victims are rightly raising concerns and calling for action, but fewer men are speaking against the issue,” she said.

“There needs to be more support from men.”